What is AI?

To many people, AI means typing sentences into ChatGPT and prompting it to converse with you or help you with tasks. Others may point out the AI/ML underpinnings of the algorithms that serve us content across the internet, or an AI Overview taking the place of traditional Google search results.

But increasingly, AI is digital employees, building and working on behalf of companies. You may have heard them referred to as AI agents.

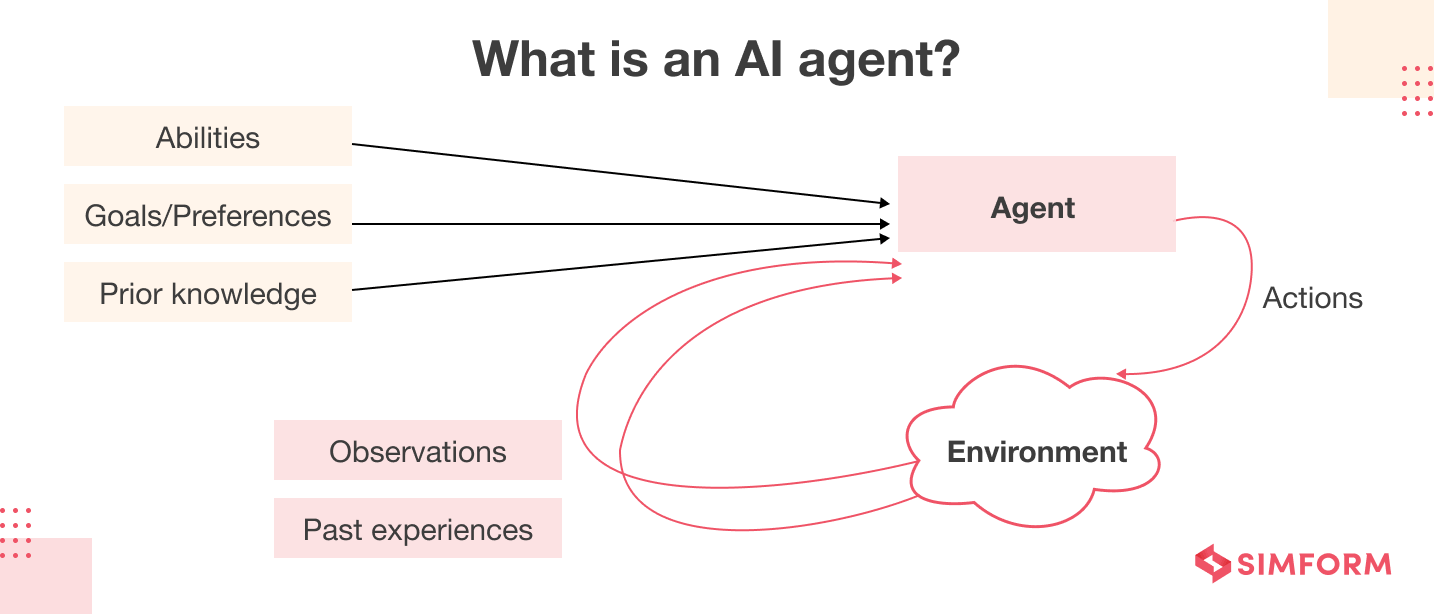

An AI agent is a software application that uses Large Language Models (LLMs) to perform specific tasks autonomously. These agents take contextually relevant actions based on sensory input, whether in physical, virtual, or mixed reality environments.

AI agents are designed to perceive their environments, make decisions, and take actions to achieve specific goals. Unlike your interactions with Claude or ChatGPT, they don’t require continual prompting—once set up, they solve the problem they’re built for.
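To make that perceive, decide, act cycle concrete, here is a minimal Python sketch. Everything in it is hypothetical and for illustration only: `CounterEnvironment` is a toy environment, and `rule_based_policy` is a stand-in for the spot where a real agent would call an LLM to choose its next action. The point is just the shape of the loop: once started, the agent keeps going until it reaches its goal, with no further prompting.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class CounterEnvironment:
    """Toy environment: a single integer the agent can nudge up or down."""
    value: int = 0

    def apply(self, action: str) -> None:
        if action == "increment":
            self.value += 1
        elif action == "decrement":
            self.value -= 1
        else:
            raise ValueError(f"unknown action: {action}")


def rule_based_policy(observation: int, goal: int) -> str:
    """Hypothetical stand-in for an LLM call that picks the next action."""
    return "increment" if observation < goal else "decrement"


@dataclass
class Agent:
    goal: int
    policy: Callable[[int, int], str]
    history: List[Tuple[int, str]] = field(default_factory=list)

    def run(self, env: CounterEnvironment, max_steps: int = 50) -> Optional[int]:
        """Loop until the goal is reached; return steps taken, or None on timeout."""
        for step in range(max_steps):
            observation = env.value                       # perceive
            if observation == self.goal:
                return step
            action = self.policy(observation, self.goal)  # decide
            env.apply(action)                             # act
            self.history.append((observation, action))
        return None
```

Calling `Agent(goal=0, policy=rule_based_policy).run(CounterEnvironment(value=3))` walks the counter down to zero on its own, and `history` keeps a record of each observation and the action chosen for it.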

Agents are problem-solving machines, and increasingly they’re becoming digital employees, with the potential to further shift job market dynamics.

AI’s evolving labor impacts

We’re already seeing AI impact labor considerations for major corporations: Amazon CEO Andy Jassy claims the company’s GenAI code assistant, Amazon Q, has already saved it 4,500 developer-years of work in foundational code modernization efforts.

Yet many software engineers see GenAI code assistants as solving the wrong problem. Instead of unblocking workflow challenges and crowded calendars that keep engineers from programming, code assistants speed up code creation, the part of their job many developers enjoy the most.

Yes, there is value in accelerating the tedious but critical development tasks of updating foundational software or writing tests. However, that doesn’t change the fact that many engineers don’t think coding assistants work well enough yet. While they hear engineering executives say that this will give teams time for new, innovative feature work, for many engineers, AI is an irritant and a source of stress about a rapidly changing future.

Will I lose my job? Am I being replaced? Many of us have had such thoughts. With Gartner predicting that enterprise usage of Generative AI tools to develop, test, and operate software will 10x by 2027, many engineers are anxious about what the future of software development looks like.

For much more on this topic, listen to my conversation with Amir Behbehani, Founder and Chief AI Engineer @ memra.

Too much momentum

The angst around AI’s current effectiveness may hinder some AI transformation efforts, but it won’t stop them. In Amazon’s example alone, the gains from enhanced security and reduced infrastructure costs are estimated to save the company $260M annually. These improvements can be foundational. When I spoke with GitHub’s then Deputy CSO, Jacob DePriest, he highlighted that the secure-by-design approach of integrating security filtering into AI code assistants like GitHub’s Copilot can catch and weed out potential vulnerabilities before they emerge.

There’s too much potential upside for enterprises to not invest in GenAI tooling, let alone ignore the opportunity to put their own GenAI applications into production. They can’t risk being left behind.

And agents are a clear next step in AI infrastructure. Yes, it’s great to enhance your team’s productivity. But creating tireless digital employees who don’t sleep, don’t eat, and continually solve problems for you, with infrastructure costs but no salary? That’s an entirely new paradigm.

Unlike traditional robotic process automation (RPA), which operates on a set of predefined rules, Agentic AI works with knowledge and context, making real-time decisions and adapting dynamically. This allows agents to go beyond automating workflows and become part of an organization’s operational fabric, executing more than just repetitive tasks.

From deterministic to non-deterministic code

Automation was already a hot topic in software development before AI agent capabilities evolved, with one study finding that over the last two years alone bot-created Pull Requests (PRs) in key open-source repos surged from 5% to 15%. LinearB’s research further found that 13.3% of all pull requests in their data set were bot-created, highlighting the increasing reliance on automation to scale software development.

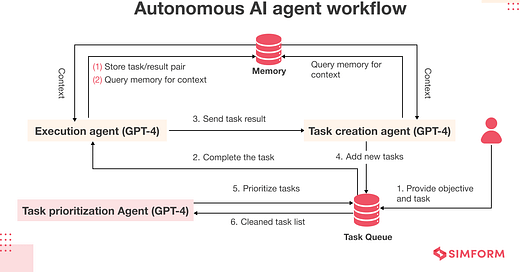

However, these traditional software bots rely on deterministic code, where a given input always produces the same predictable output. AI agents work differently: rather than executing static code, they interact with dynamic environments, making decisions based on a range of factors, including real-time data and historical context. Agents differentiate themselves by leveraging both long-term memory (such as vector or graph databases), which stores knowledge over extended periods, and short-term memory, which allows them to adapt in real time to changes in their environment. Together, these memory systems let agents carry out complex, multi-step tasks that require ongoing contextual awareness.
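Here is a rough sketch of how those two kinds of memory might sit side by side. It is a toy under loud assumptions: `AgentMemory` is a hypothetical class, the bag-of-words `embed` function stands in for a real embedding model, and a plain Python list stands in for a vector database. Short-term memory is a small rolling window of recent events, while long-term memory is searched by similarity.

```python
import math
from collections import Counter, deque
from typing import Deque, List, Tuple


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real system would use an embedding model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class AgentMemory:
    """Hypothetical memory layer: rolling short-term window plus searchable long-term store."""

    def __init__(self, short_term_size: int = 3) -> None:
        # Short-term: only the most recent events survive.
        self.short_term: Deque[str] = deque(maxlen=short_term_size)
        # Long-term: every fact is kept alongside its vector (stand-in for a vector DB).
        self.long_term: List[Tuple[Counter, str]] = []

    def observe(self, event: str) -> None:
        self.short_term.append(event)

    def remember(self, fact: str) -> None:
        self.long_term.append((embed(fact), fact))

    def recall(self, query: str, k: int = 1) -> List[str]:
        """Return the k stored facts most similar to the query."""
        query_vec = embed(query)
        ranked = sorted(
            self.long_term,
            key=lambda item: cosine(query_vec, item[0]),
            reverse=True,
        )
        return [fact for _, fact in ranked[:k]]
```

An agent built this way could call `recall(...)` to pull relevant background knowledge into its prompt while `short_term` carries the last few things that happened in the current task.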

This shift challenges the way engineers approach software development, moving from manual coding tasks to managing agents that continuously improve and adapt to changing environments. And software development will hardly be the last area impacted.

Rapidly addressing ‘black box’ concerns

While the promise of Agentic AI is substantial, it comes with challenges, particularly around security and transparency. LLM decision-making processes are often opaque. However, most AI agents are designed to maintain real-time auditing and traceability. By caching their reasoning processes, these agents provide an auditable trail of decisions, ensuring that organizations can maintain oversight and accountability.
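One simple way to picture that auditable trail is an append-only log where each decision is stored with the reasoning behind it. The sketch below is an assumption about what such a log could look like, not how any particular agent framework implements it; `DecisionRecord` and `AuditTrail` are hypothetical names.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import List


@dataclass(frozen=True)
class DecisionRecord:
    """One immutable entry in the agent's decision log."""
    step: int
    observation: str
    reasoning: str
    action: str
    timestamp: str


class AuditTrail:
    """Hypothetical append-only log of an agent's decisions."""

    def __init__(self) -> None:
        self._records: List[DecisionRecord] = []

    def record(self, observation: str, reasoning: str, action: str) -> DecisionRecord:
        entry = DecisionRecord(
            step=len(self._records),
            observation=observation,
            reasoning=reasoning,
            action=action,
            timestamp=datetime.now(timezone.utc).isoformat(),
        )
        self._records.append(entry)
        return entry

    def to_jsonl(self) -> str:
        """Serialize the trail as JSON Lines, one decision per line, for later review."""
        return "\n".join(json.dumps(asdict(r)) for r in self._records)
```

Writing one JSON object per line keeps the trail easy to ship to whatever logging or review tooling a team already uses.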

There are other important considerations: LLMs have biases, just as humans do. But once again, the train has left the station. If a company can code a tireless, problem-solving digital employee, it will. Agents for software development, customer support, sales, and much more are popping up all over.

Trust + safety

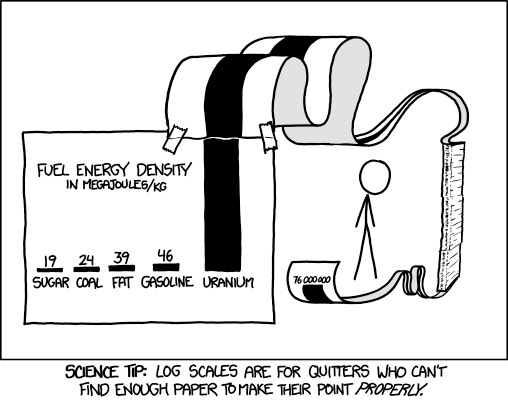

We are at a turning point. While there are valid concerns about AI’s water and energy usage, the technology is too promising for builders to ignore. Pandora’s box is open, and we must deal with the consequences. On the energy front, we should be encouraged to see nuclear power rapidly gaining support, given its incredible power generation ratio and how safe it is compared to sources like oil and gas. Nuclear, like AI, has been the victim of bad press: for nuclear, it was fossil fuel company propaganda; for AI, it was science fiction.

From simple task automation to full-scale digital employees, AI is transforming how code is written, reviewed, and integrated. AI agents are rapidly being integrated into enterprise workflows in software, customer support, sales, and beyond.

But there is a risk. Just as a human may lie or misremember, AI can hallucinate, providing incorrect or misleading results. As agents and other AI applications are increasingly put into production worldwide, we need a trust and safety layer for AI that gives engineers visibility into compound AI systems, lets us measure success, and enables evaluations and iteration to improve AI applications and eliminate hallucinations.
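For a sense of what "measuring success" can mean at its very simplest, here is a toy evaluation harness. It is a sketch under stated assumptions, not any particular product’s API: `EvalCase` and `evaluate` are hypothetical names, `app` is whatever function wraps your AI application, and exact match after normalization is a deliberately crude scoring rule that real evaluations would replace with something richer.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class EvalCase:
    """A prompt paired with the answer we expect back."""
    prompt: str
    expected: str


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences don't count as failures."""
    return " ".join(text.lower().split())


def evaluate(app: Callable[[str], str], cases: List[EvalCase]) -> Dict[str, object]:
    """Run every case through the app and report the pass rate plus failing prompts."""
    failures = [
        case.prompt
        for case in cases
        if normalize(app(case.prompt)) != normalize(case.expected)
    ]
    passed = len(cases) - len(failures)
    return {
        "total": len(cases),
        "passed": passed,
        "pass_rate": passed / len(cases) if cases else 0.0,
        "failures": failures,
    }
```

Re-running the same set of cases after each prompt or model change gives you a number to iterate against, and the `failures` list tells you where to look first.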

My new job

These are the exact problems Galileo is solving with its Evaluation Intelligence Platform, and it’s why last month I joined Galileo as Head of Developer Awareness.

AI has only begun to reshape the world, and more changes are coming whether you want them or not. Companies will continue to try to build digital employees. It may upend the entire labor market. To backstop those changes, we need effective AI evaluations to be a core principle of this technological transformation, and of how we build AI.

So that’s what I’ll be working on for the next couple of years: a thorny but important problem. If you’ve got ideas, I’d love to hear from you.

What else have I been up to?

Given that it’s been a year since my last edition of this newsletter—👋 that reminds me, welcome new readers!—I want to share a couple highlights from this past year that folks may enjoy.

#1? Penny

That’s right, Katherine and I added a third cat! She’s stolen our hearts, and been a deep distraction from me sitting down to write 😅

Leaving Dev Interrupted

On the podcasting side, I recently left my role as Host of Dev Interrupted to move to Galileo. However, I’m still appearing in a couple more recorded episodes through the end of this fourth season. Here are three of the DI articles and episodes I’m particularly proud of from this past year:

Thanks for tuning in for another edition of Test Lab. Let me know what you thought by leaving a comment; it’s always great to hear from readers.

Hope you’re well,

Conor